In the summer of 1958, Frank Rosenblatt was putting on a show. The Cornell University psychologist made the trip down the East Coast to the Weather Bureau in Washington, D.C.

Rosenblatt wasn’t meeting meteorologists. His partners were at the Office of Naval Research, a group of clever G-Men who needed access to the bureau’s IBM 704 computer.

In the computer bay on Independence Avenue, Rosenblatt and his backers invited reporters to watch as he fed the machine a deck of 50 punch cards. The 704 whirred and buzzed, and began to guess the location of a square symbol on each.

After 50 practice runs, the machine could correctly puzzle out where each mark was by using what would become known as the ‘perceptron’ algorithm. Much like a young child, the room-sized computer learned to tell left from right.

Reporters were shocked. A wire story ran that afternoon — and the New York Times the following day — carrying the news that the ‘embryo [would one day] walk, talk, see, write, reproduce itself and be conscious of its existence.’

The perceptron’s operation was straightforward. At its core lay a set of weighted inputs that could be adjusted depending on whether the machine made correct or incorrect guesses. Just as we might learn language through correction and repetition, Rosenblatt believed his machine might classify objects and ‘perceive’ (hence the name) its surroundings.
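To make the idea of adjustable weighted inputs concrete, here is a minimal sketch of the perceptron learning rule in Python. It is an illustration, not a reconstruction of Rosenblatt's 704 program: the four-cell 'cards', the learning rate, and the function names `predict` and `train` are all assumptions made for the example.

```python
def predict(weights, bias, x):
    """Guess 1 if the weighted sum of the inputs is positive, else 0."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train(samples, epochs=50, lr=1.0):
    """Perceptron rule: change the weights only when a guess is wrong."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            if error != 0:
                # Nudge each weight toward the correct answer.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
    return weights, bias


# Hypothetical toy data: a four-cell card with a single mark.
# Label 0 means the mark is in the left half, 1 means the right half.
samples = [
    ([1, 0, 0, 0], 0),
    ([0, 1, 0, 0], 0),
    ([0, 0, 1, 0], 1),
    ([0, 0, 0, 1], 1),
]

weights, bias = train(samples)
predictions = [predict(weights, bias, x) for x, _ in samples]
print(predictions)  # [0, 0, 1, 1]
```

On this toy data the weights settle after a handful of passes: correct guesses leave them untouched, and each wrong guess shifts them a little toward the right answer.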

Funded generously by the U.S. Navy, the algorithm was intended to loosely mimic the basic operations of biological neurons. Rosenblatt saw in his work the mechanical embodiment of Turing’s proposed ‘child machine’, a device that could acquire knowledge without explicit programming.

The spectacle worked. The press coverage unlocked a six-figure grant for a purpose-built physical computer, the Mark I Perceptron, and catapulted Rosenblatt from obscure psychologist to Cold-War wunderkind.

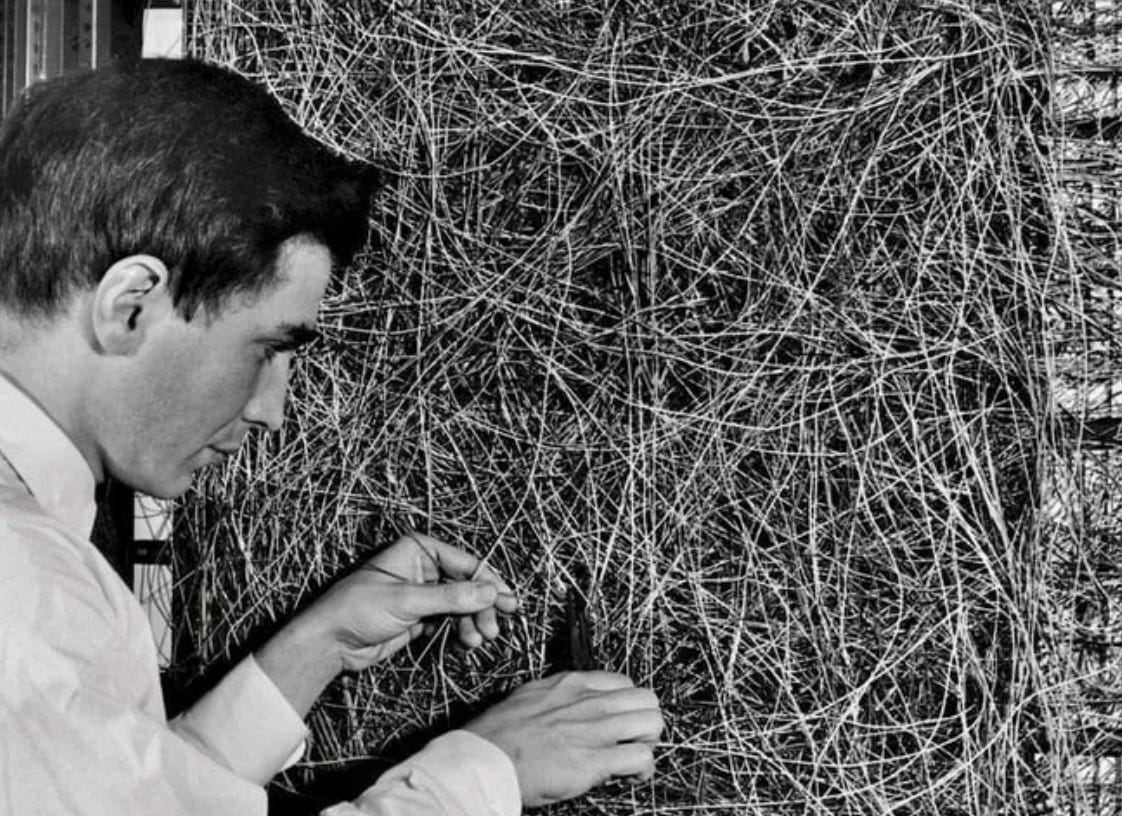

Rosenblatt’s team built the Mark I in the years that followed at Cornell Aeronautical Laboratory. Technicians soldered 400 photocells into a 20 × 20 ‘retina’ and connected them to 512 association units according to a table of random numbers.

The resulting tangle of wire looked like a copper bird’s nest, but it seemed able to perform some basic intelligence tasks (like identifying simplified silhouettes in target-recognition studies). It might not have been pretty, but it showed promise.

That was good news, because the military needed a win. The Soviet Union's launch of the Sputnik satellite in 1957 had begun to cast a long shadow over American scientific confidence.

In laboratories and government offices, anxiety gave way to urgency. Money flowed into universities and research institutes, each hoping to uncover technologies that could secure American supremacy.

An intelligent machine could put Uncle Sam back on the front foot. America might have been second to orbit, but it was going to win the race for thinking machines.

Boundary problems

The Navy’s ‘electronic brain’ was exactly what a nervous nation wanted. Within months, magazines speculated that computers like the Mark I would propel the United States closer to its pulp sci-fi dreams.

Grainy, high-contrast photos of the Mark I’s panels and flickering bulbs promised rationality, cleanliness, and progress. The machine provided an irresistible visual shorthand for a better future.

But not everyone bought it.

Marvin Minsky and his longtime friend and collaborator Seymour Papert were amongst a growing group of researchers who felt the expectations for the perceptron project drastically overshot reality.

In 1969, the pair famously put the boot in by writing *Perceptrons: An Introduction to Computational Geometry*. Underneath the innocuous title lay a clear but troubling idea: single-layer perceptrons could not solve certain basic logical problems like XOR (exclusive-OR).

The XOR function takes two inputs and returns ‘true’ if exactly one of them is true, and ‘false’ if both are true or both are false. The rub for Rosenblatt’s perceptron was that it classified by drawing a single straight line through its input data, with one category on each side.

XOR could not be divided this way. It is like trying to separate the diagonally opposed black and white squares of a checkerboard with a single straight cut.
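The straight-line limitation can be checked by brute force. The sketch below tries every combination of two weights and a bias on a coarse grid and reports the best score any single line achieves. The grid range and the helper name `best_accuracy` are assumptions for illustration. A grid search is a demonstration rather than a proof, though the result matches the known one: no straight line gets all four XOR cases right.

```python
from itertools import product


def best_accuracy(truth_table, grid):
    """Most rows any rule of the form w1*a + w2*b + bias > 0 classifies correctly."""
    best = 0
    for w1, w2, bias in product(grid, repeat=3):
        correct = sum(
            (1 if w1 * a + w2 * b + bias > 0 else 0) == target
            for (a, b), target in truth_table.items()
        )
        best = max(best, correct)
    return best


# Candidate weights and biases from -4.0 to 4.0 in steps of 0.5 (an arbitrary choice).
grid = [i / 2 for i in range(-8, 9)]

AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

and_best = best_accuracy(AND, grid)
xor_best = best_accuracy(XOR, grid)
print(and_best, xor_best)  # 4 3
```

AND is separable, so some line scores four out of four. For XOR the best any line manages is three out of four.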

But while their argument was mathematically sound, Minsky and Papert also needed it to be right. At the time, the duo were embedded at MIT’s Project MAC where they worked on symbolic, rule-based systems (a very different way of building AI compared to Rosenblatt’s proto-connectionism).

After Minsky and Papert’s critique, the decline in enthusiasm for the algorithm was unforgiving. State funding in the US (and in the UK a few years later) shifted toward symbolic AI, which was seen as a safer investment given the perceived limitations of neural approaches.

I am wary of buying into the ‘AI winter’ meme, given that a huge number of important contributions to the AI project happened within this period. But I will grant that it is a useful concept if we’re primarily interested in the number of researchers in the field, the level of funding it sustains, or how many column inches it grabs.

Assuming for the purposes of this post that we’re content to use these yardsticks, then what followed was a decade of cold as machine learning approaches like the perceptron fell out of fashion.

Not that they stayed there for long.

By the 1980s, it was Connectionism Summer™ when John Hopfield ‘brought neural nets back from the dead.’ A few years later, David Rumelhart, Geoffrey Hinton, and Ronald Williams finished the great resurrection when they popularised Paul Werbos’ backpropagation method.

The latter breakthrough showed that multilayer networks could overcome the limitations pointed out by Minsky and Papert. Unlike Rosenblatt’s single-layer perceptrons, the models of the 1980s contained one or more hidden layers, and each layer’s weights were adjusted using error signals passed backward from the layers after it.
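To see why an extra layer matters, here is a small sketch of a two-layer network of threshold units that computes XOR. The weights are picked by hand for illustration and are not learned; what backpropagation added was a way to find weights like these automatically from errors. The names `step` and `xor_network` are assumptions for the example.

```python
def step(total):
    """Threshold unit: fire (1) if the weighted sum is positive, else stay off (0)."""
    return 1 if total > 0 else 0


def xor_network(a, b):
    # Hidden layer: two units, each drawing its own straight line.
    h_or = step(a + b - 0.5)   # fires if at least one input is on
    h_and = step(a + b - 1.5)  # fires only if both inputs are on
    # Output unit: on when the OR unit fires but the AND unit does not.
    return step(h_or - h_and - 0.5)


outputs = {(a, b): xor_network(a, b) for a in (0, 1) for b in (0, 1)}
print(outputs)  # {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}
```

Each hidden unit still draws only a straight line. It is the combination of two lines in the output unit that carves out the region a single perceptron cannot.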

As we discussed in the first entry in this series, Rosenblatt’s work was significant because it showed how to put Warren McCulloch and Walter Pitts’ mathematical abstraction of a biological neuron into practice. Even early, limited successes showed that connectionism had potential.

When perceptrons got stuck in the mud, researchers were forced to find a solution. That solution led to backpropagating neural networks. When backpropagating neural networks proved scalable, we got deep learning and eventually ChatGPT.

But the through-line from 1958 to today's language models is also ideological. Every major breakthrough in AI has been underwritten by the same awe and anxiety that sent reporters scrambling during the Cold War.

Today's moment is functionally different, but the underlying narrative is remarkably familiar. Venture capital cash stands in for Navy grants, driven by the conviction that whoever builds the smartest machines wins the future.