Backpropagation is older than you think

AI Histories #6: Backprop begins in the Second World War, not with Werbos or Rumelhart

Backpropagation is the stuff that makes neural networks tick. Without it, it’s possible there’s no AI project as we know it today. No deep learning. No computer vision. No ChatGPT.

That’s because frontier AI systems are learning machines. They adjust their internal parameters to mimic patterns in data and get better by minimising their errors. Backprop helps them do that.

Using backprop, a network can make a prediction and calculate how far that prediction is from the true value. The technique takes the error and moves it backwards through the network, layer by layer, using the chain rule of calculus to work out how much each connection contributed to the mistake.

Each weight is then adjusted via the magic of gradient descent so the network gets closer to the right answer next time.
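To make those two steps concrete, here is a minimal sketch in plain Python (standard library only). It assumes a toy network with one input, a small hidden layer of tanh units and one linear output, trained on squared error. The function names (`forward`, `backward`, `sgd_step`, `train`) and the sine-wave data are illustrative choices, not anything taken from Rumelhart, Hinton and Williams.

```python
import math
import random

# Toy network (an illustrative assumption): 1 input -> `hidden` tanh units -> 1 linear output.
# Parameters are stored as a tuple (w1, b1, w2, b2).

def forward(params, x):
    """Forward pass: return hidden activations and the network's prediction."""
    w1, b1, w2, b2 = params
    h = [math.tanh(w * x + b) for w, b in zip(w1, b1)]
    y = sum(v * hj for v, hj in zip(w2, h)) + b2
    return h, y

def backward(params, x, target):
    """Return the loss 0.5*(y - target)**2 and its gradient w.r.t. every parameter."""
    _, _, w2, _ = params
    h, y = forward(params, x)
    err = y - target                                  # dLoss/dy: signed prediction error
    grad_w2 = [err * hj for hj in h]                  # output weights: error times activation
    grad_b2 = err
    # Chain rule: send the error back through each output weight and the tanh derivative.
    delta = [err * v * (1.0 - hj ** 2) for v, hj in zip(w2, h)]
    grad_w1 = [d * x for d in delta]
    grad_b1 = list(delta)
    return 0.5 * err ** 2, (grad_w1, grad_b1, grad_w2, grad_b2)

def sgd_step(params, grads, lr):
    """Gradient descent: nudge each parameter a small step against its gradient."""
    w1, b1, w2, b2 = params
    g_w1, g_b1, g_w2, g_b2 = grads
    return (
        [p - lr * g for p, g in zip(w1, g_w1)],
        [p - lr * g for p, g in zip(b1, g_b1)],
        [p - lr * g for p, g in zip(w2, g_w2)],
        b2 - lr * g_b2,
    )

def init_params(hidden=3, seed=0):
    rng = random.Random(seed)
    return (
        [rng.uniform(-1, 1) for _ in range(hidden)],
        [0.0] * hidden,
        [rng.uniform(-1, 1) for _ in range(hidden)],
        0.0,
    )

def mean_loss(params, data):
    return sum(0.5 * (forward(params, x)[1] - t) ** 2 for x, t in data) / len(data)

def train(params, data, lr=0.05, epochs=500):
    for _ in range(epochs):
        for x, t in data:
            _, grads = backward(params, x, t)
            params = sgd_step(params, grads, lr)
    return params

# Illustrative data: nine points of sin(pi * x) on [-1, 1].
data = [(k / 4, math.sin(math.pi * k / 4)) for k in range(-4, 5)]
start = init_params()
trained = train(start, data)
print(mean_loss(start, data), "->", mean_loss(trained, data))
```

`backward` is where the chain rule does its work. The output error is multiplied by each outgoing weight and by the tanh derivative `1 - h**2`, which gives each hidden unit its share of the blame. A quick way to trust code like this is to compare its gradients against finite differences.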

For many years, backprop was thought of as the brainchild of David Rumelhart and Geoffrey Hinton. Their 1986 paper (with Ronald Williams) showed that neural networks could learn internal features. It felt like a new day for machine learning researchers still reeling from Minsky and Papert’s takedown of the perceptron.

A little later, received wisdom shifted as credit for backprop went to a Harvard graduate student called Paul Werbos. In his 1974 thesis, Werbos framed the idea as ‘reverse‐mode’ optimisation for dynamic systems. Alas, symbolic AI was in vogue and his thesis gathered dust.

But as Werbos himself acknowledges, backprop’s lineage goes much further back.

Backpropagation is born of optimal control theory, the science of how to steer complex systems toward a goal in the most efficient way possible. Whether it’s a jet adjusting its thrust or a robot arm learning to move, the challenge is the same: to figure out how to act now in order to do better later.

Where did backprop come from?

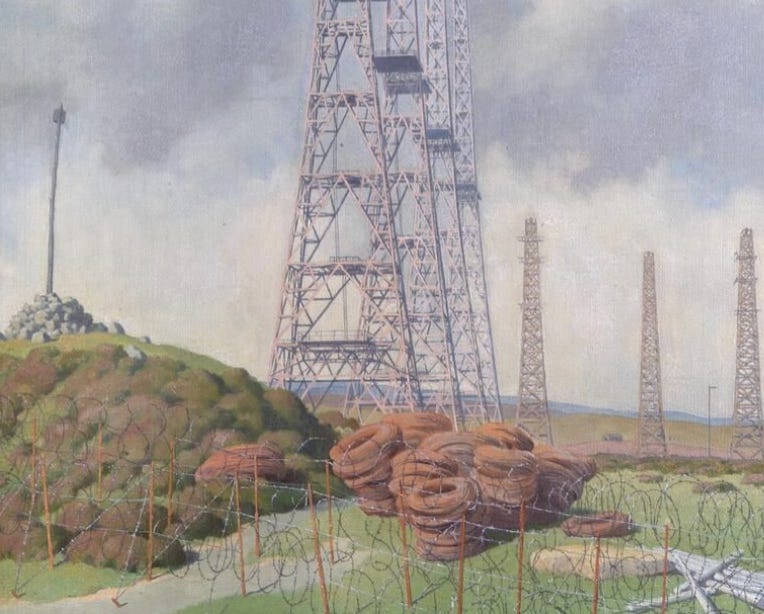

The genesis of optimal control theory can be traced to 1940s-era thinking about optimisation, which involved a constellation of techniques known as ‘operational research’ in Britain and ‘operations research’ in the United States.

During the Second World War, ‘operations research’ referred to work that puzzled out the most effective way of achieving a given military objective. Its goal was, as British radar pioneer Robert Watson-Watt put it, ‘to examine quantitatively whether the user organisation is getting from the operation of its equipment the best attainable contribution to its overall objective.’

With roots in the analysis of radar telemetry in late 1930s Britain, the field ‘diffused extraordinarily rapidly’ through British and American commands during the Second World War.

In the aftermath of the Second World War, the American mathematical scientist George Bernard Dantzig built on research conducted by the US military while working on the Pentagon’s efforts to mechanise military planning.

In 1947, he responded to his assignment by conceiving the simplex method, a widely used algorithm for linear programming: finding the best outcome in a mathematical model whose requirements are represented by linear relationships.

Dantzig left the Pentagon in 1952 to take up a position in the Mathematics Department of the RAND Corporation in Santa Monica, California. At RAND, Dantzig gave a series of talks, including a lecture attended by Richard Bellman, who was searching for approaches to multistage decision problems.

One oft-told story is that this lecture gave Bellman his eureka moment, though it is likely that the Soviet mathematician Lev Pontryagin’s work on the ‘maximum principle’ for taking a system from one state to another was just as important.

The techniques Bellman proceeded to develop over the 1950s came to be known as dynamic programming, an algorithmic approach for solving an optimisation problem by breaking the problem down into simpler subproblems. Reflecting on the development of dynamic programming, Bellman noted:

> A number of mathematical models of dynamic programming type were analyzed using the calculus of variation. The treatment was not routine since we suffered either from the presence of constraints or from an excess of linearity. An interesting fact that emerged from this detailed scrutiny was that the way one utilized resources depended critically upon the level of these resources, and the time remaining in the process.

Bellman’s insight marked what some historians see as the transition between ‘classical control theory’ and what became known as optimal control theory. Where classical control was concerned with stabilising a given system through continuous monitoring and modification, Bellman’s realisation involved thinking about a system as a temporally evolving sequence of states.

In the 1950s, the US military establishment was fretting about how to steer missiles that had only minutes to correct their flight paths. The problem was unforgiving. Researchers needed to compute, in real time, the precise adjustments required to hit a moving target while accounting for changing wind, velocity, altitude, and fuel constraints.

The challenge led Richard Bellman to his big idea, or at least lots of smaller ones. Bellman decided to start at the final goal, then work backward to figure out the best decision at each step given the remaining time and resources.
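Bellman’s backward reasoning can be sketched on a toy problem. The Python below is a minimal illustration under stated assumptions, not a model of missile guidance: a hypothetical budget of whole resource units must be spread over a few stages, spending `u` units at stage `t` earns an assumed reward of `weights[t] * sqrt(u)` (diminishing returns), and the value table records the best achievable reward given the stage reached and the resources left, echoing Bellman’s remark about resource levels and time remaining. The names `solve_backward` and `brute_force` are illustrative.

```python
import itertools
import math

def solve_backward(weights, budget):
    """Backward induction over a hypothetical staged resource-allocation problem.

    value[t][s] = best total reward obtainable from stage t onward with s units left.
    Working backwards from the final stage means each decision only needs the
    already-solved stage after it.
    """
    T = len(weights)
    value = [[0.0] * (budget + 1) for _ in range(T + 1)]  # value[T][s] = 0: nothing left to earn
    choice = [[0] * (budget + 1) for _ in range(T)]
    for t in range(T - 1, -1, -1):                        # start at the end, move backward
        for s in range(budget + 1):
            best_u, best_v = 0, -math.inf
            for u in range(s + 1):                        # spend u of the s remaining units now
                v = weights[t] * math.sqrt(u) + value[t + 1][s - u]
                if v > best_v:
                    best_u, best_v = u, v
            value[t][s], choice[t][s] = best_v, best_u
    # Replay the stored decisions forward to recover the optimal plan.
    plan, s = [], budget
    for t in range(T):
        u = choice[t][s]
        plan.append(u)
        s -= u
    return value[0][budget], plan

def brute_force(weights, budget):
    """Check every allocation explicitly (only feasible for tiny problems)."""
    best = -math.inf
    for plan in itertools.product(range(budget + 1), repeat=len(weights)):
        if sum(plan) <= budget:
            best = max(best, sum(w * math.sqrt(u) for w, u in zip(weights, plan)))
    return best

weights = [1.0, 2.0, 1.5]          # hypothetical per-stage payoffs
best, plan = solve_backward(weights, budget=6)
print(best, plan)
```

The backward pass visits each (stage, remaining budget) pair once and tries every possible spend, so for `T` stages and `R` units it does roughly T·R²/2 work, whereas the brute-force check enumerates (R+1)^T plans.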

This idea became a cornerstone of optimal control theory, formalised by Bellman under the name dynamic programming, which sought to find optimal strategies in complex, time-dependent systems.

By the 1960s, control engineers were combining this approach with ‘adjoint equations’, drawing on the work of Pontryagin and his collaborators in the USSR. The method involved nudging the final outcome and tracing how that change would have flowed backward through the system. By doing so, they could work out how small adjustments earlier on (e.g. thrust, angle, or speed) would affect the final result.

In the closing years of the decade, Bryson and Ho published Applied Optimal Control. Page after page shows the same move. Pose a goal (say, minimise fuel while hitting Mach 3). Derive Euler–Lagrange equations. Run the gradient in reverse through the system. Update parameters and repeat.
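The recipe Bryson and Ho apply page after page can be sketched in discrete time. What follows is a minimal Python illustration, not their notation or their continuous-time Euler–Lagrange derivation. It assumes a hypothetical one-dimensional linear system, a cost that penalises missing a target at the final step plus a small charge for control effort, and made-up constants `A`, `B`, `X0`, `TARGET` and `C`. The backward sweep plays the role of the adjoint equations, carrying the sensitivity of the final outcome back through the system one step at a time.

```python
# Hypothetical discrete-time system (all constants are illustrative assumptions):
#   x[t+1] = A * x[t] + B * u[t]
#   J(u)   = (x[T] - TARGET)**2 + C * sum(u[t]**2)
# Goal: reach TARGET at the final step while spending little control effort.

A, B, X0, TARGET, C = 0.9, 0.5, 0.0, 1.0, 0.01

def simulate(u):
    """Forward pass: roll the state forward under the control sequence u."""
    xs = [X0]
    for ut in u:
        xs.append(A * xs[-1] + B * ut)
    return xs

def cost(u):
    xs = simulate(u)
    return (xs[-1] - TARGET) ** 2 + C * sum(ut ** 2 for ut in u)

def adjoint_gradient(u):
    """dJ/du[t] for every t from one forward pass and one backward (adjoint) sweep."""
    xs = simulate(u)
    lam = 2.0 * (xs[-1] - TARGET)          # adjoint at the final time: dJ/dx[T]
    grad = [0.0] * len(u)
    for t in range(len(u) - 1, -1, -1):    # sweep backward in time
        # Here lam holds dJ/dx[t+1]; u[t] reaches x[t+1] through the factor B.
        grad[t] = 2.0 * C * u[t] + B * lam
        lam = A * lam                      # dJ/dx[t] = A * dJ/dx[t+1] (no running state cost)
    return grad

def optimise(steps=200, horizon=10, lr=0.5):
    """Pose the goal, run the gradient in reverse, update the controls, repeat."""
    u = [0.0] * horizon
    for _ in range(steps):
        g = adjoint_gradient(u)
        u = [ut - lr * gt for ut, gt in zip(u, g)]
    return u

u_opt = optimise()
print(cost([0.0] * 10), "->", cost(u_opt))
```

Because each step of the sweep reuses the adjoint from the step after it, a single backward pass yields the gradient for every control at once; checking the same gradient by finite differences would need two extra simulations per control.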

In the 1970s, Finnish mathematician Seppo Linnainmaa was working on numerical stability. He wanted a way to get exact derivatives from a computer program by following the logic of the code itself. His solution was to record every step a computer took when calculating a function, then replay those steps in reverse to figure out how each input affected the output.
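Linnainmaa’s record-and-replay idea is what is now called reverse-mode automatic differentiation. Here is a minimal modern Python sketch of it, not a reconstruction of his original program. Each arithmetic operation appends an entry to a hypothetical `Tape`, listing its inputs and the local derivative with respect to each, and `gradient` walks that list backwards, applying the chain rule. Only addition, multiplication and `sin` are supported, to keep it short.

```python
import math

class Tape:
    """Records every elementary operation in the order it is executed."""
    def __init__(self):
        self.entries = []   # entries[i] = list of (input_index, d entry_i / d input)

    def var(self, value):
        """Register an independent input variable."""
        self.entries.append([])
        return Var(self, len(self.entries) - 1, value)

class Var:
    def __init__(self, tape, index, value):
        self.tape, self.index, self.value = tape, index, value

    def _record(self, value, parents):
        self.tape.entries.append(parents)
        return Var(self.tape, len(self.tape.entries) - 1, value)

    def __add__(self, other):
        return self._record(self.value + other.value,
                            [(self.index, 1.0), (other.index, 1.0)])

    def __mul__(self, other):
        return self._record(self.value * other.value,
                            [(self.index, other.value), (other.index, self.value)])

    def sin(self):
        return self._record(math.sin(self.value),
                            [(self.index, math.cos(self.value))])

def gradient(output):
    """Replay the tape in reverse, accumulating d(output)/d(entry) for each entry."""
    adjoint = [0.0] * len(output.tape.entries)
    adjoint[output.index] = 1.0
    for i in range(output.index, -1, -1):           # inputs always precede their uses
        for parent, local in output.tape.entries[i]:
            adjoint[parent] += adjoint[i] * local   # chain rule, summed over every use
    return adjoint

# Example: f(x, y) = x*y + sin(x), so df/dx = y + cos(x) and df/dy = x.
tape = Tape()
x, y = tape.var(2.0), tape.var(3.0)
f = x * y + x.sin()
grads = gradient(f)
print(f.value, grads[x.index], grads[y.index])
```

One backward replay gives the derivative of the output with respect to every recorded input, however many there are, which is the property that later made the trick so valuable for training networks with many weights.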

Bryson, Ho, and Linnainmaa became mainstays of the reference lists of a new generation of machine learning researchers in the 1980s, with the trio appearing in what felt like every other paper during the height of the neural network revival.

As Yann LeCun put it in a 1988 paper published in conference proceedings co-edited by Geoffrey Hinton:

> From a historical point of view, back-propagation had been used in the field of optimal control long before its application to connectionist systems. Nevertheless, the interpretation of back-propagation in the context of connectionist systems, as well as most related concepts are recent, and the historical and scientific importance of [Rumelhart et al., 1986] should not be overlooked. The concepts are new, if not the algorithm.

The story of backpropagation reminds us that scientific practice is often less about bolt-from-the-blue genius than about clever recycling. If we’re careful, we can trace the idea from Dantzig’s wartime military modelling through Bellman’s dynamic-programming rockets, Bryson & Ho’s calculus, Linnainmaa’s derivatives program, and Werbos’ graduate-school thesis, to Rumelhart and Hinton’s psychological wrapping.

What modern AI calls a theory of learning is something like a travelling mathematical trick, one that has migrated across radar rooms, missile labs, and psychology departments. With each new life it accumulates fresh metaphors and meanings, but the basic idea stays the same.

The idea of chaining backwards to find what has been ‘misunderstood’ also appears in education, in the concept known as ‘scaffolding’, which lets a teacher identify what a student has not understood in order to correct it.