Sometimes, when it was quiet, secretaries at the Massachusetts Institute of Technology would slip blank sheets of paper into a large computer. In the heat of 1960s optimism, the machine would whir and beep and print out a perfectly spaced reply.

WHAT ELSE COMES TO MIND WHEN YOU THINK OF YOUR FATHER

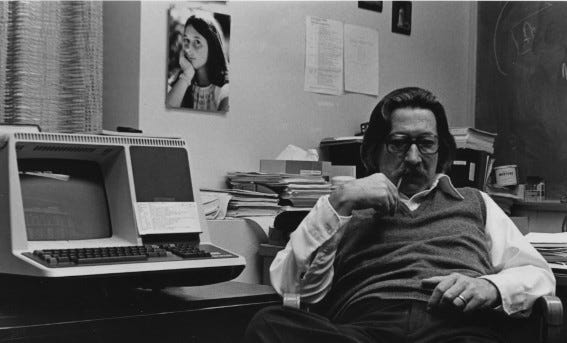

That sentence was produced by a script called DOCTOR running on an engine called ELIZA, which its creator Joseph Weizenbaum designed to study natural language communication between people and machines.

Weizenbaum was worried about what he saw. In his famous 1966 paper describing the experiment, he wrote ‘some subjects have been very hard to convince that ELIZA (with its present script) is not human.’ Later he reportedly said that his secretary requested some time with the machine. After a few moments, she asked Weizenbaum to leave the room. ‘I believe this anecdote testifies to the success with which the program maintains the illusion of understanding,’ he recalled.

The mythos that grew from those sessions is seductive. In 1966 Joseph Weizenbaum invented the first chatbot, named it ELIZA after Eliza Doolittle, and in doing so proved that computers could hold a conversation.

But the legend forgets that Weizenbaum never set out to build a conversational partner at all. It ignores the psychological dynamics that made it so popular, and doesn’t tell us anything about how exactly a computer program became famous.

A star is born

It’s useful to think of ELIZA, much like today’s large models, as a mirror. Both model users by responding with sensitivity to inputs. Both exist as extensions of the person behind the keyboard. And both remind us that intelligence is part substance and part projection.

By substance I mean whatever magic your preferred model runs on, and by projection I mean the meaning we ascribe to the models on top of this foundation. Today’s models are deeply impressive, but no matter how good they are, people still have a tendency to see in them something that isn’t there.

That isn’t a criticism of the AI project but the reality of building artefacts that shape-shift according to the person using them. ELIZA was remarkably light on substance, but it compensated by adopting a listening style that rewarded personal monologues and left projection to do the rest.

Once you see the two halves, the standard origin myth looks lopsided. It treats projection as a rounding error, and emphasises the technical credentials that confirmed ELIZA’s status as the ‘world’s first chatbot’. That’s tidy, but it strips the project of its context in a way that leaves readers with the wrong end of the stick.

Weizenbaum knew this, which is why he spent so much of his career fretting over the illusion of intelligence. Even at the time, he billed his project as closer to ‘watch humans talk to themselves’ than ‘teach a computer to talk’. As Jeff Shrager put it:

In building ELIZA, Weizenbaum did not intend to invent the chatbot. Instead, he intended to build a platform for research into human-machine conversation. This may seem obvious – after all, the title of Weizenbaum’s 1966 CACM paper is “ELIZA– A Computer Program For the Study of Natural Language Communication Between Man And Machine.”, not, for example, “ELIZA - A Computer Program that Engages in Conversation with a Human User”.

That claim lands oddly if you’ve spent years hearing that ChatGPT is the descendant of ELIZA, though it makes a certain degree of sense when you think about the experience of using AI systems.

The ELIZA project began in 1963, when Weizenbaum knocked together his own toolbox called the Symmetric List Processor, or SLIP. It worked like an add-on for FORTRAN, the workhorse programming language of the early 1960s, with flexible chains of items that could grow, shrink, and point to other lists.

Weizenbaum landed at MIT around this time, parked his SLIP routines on the lab’s IBM machine, and sought to answer a question: what if a computer, armed with nothing more than keyword tables and pronoun swaps, just bounced a user’s own sentences back at them?

He modelled the resulting program on Carl Rogers’ person-centred therapy, a counselling style where the therapist mostly repeats or paraphrases the client. Weizenbaum recognised a gift horse when he saw one. If your program can only juggle keywords and pronouns, best to use it in a context where minimal responses count as professional technique.

With a few hundred lines of code, Weizenbaum used his SLIP routines to chop each user sentence into a list of words, swapped pronouns (‘I’ → ‘YOU’) and tacked on open-ended prompts (‘TELL ME MORE’). He named the engine ELIZA and the therapist script that it ran DOCTOR. The system was used at MIT by Weizenbaum and his colleagues, but it took until the end of the decade for it to escape containment.
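To make the trick concrete, here is a minimal sketch in Python of the three moves described above: split the sentence into words, swap the pronouns, and fall back on an open-ended prompt. It is an illustration only, not Weizenbaum’s SLIP code or the real DOCTOR script. The tables `REFLECTIONS` and `KEYWORDS`, the ‘I am’ rule, and the function names are all assumptions invented for this example.

```python
import re

# Hypothetical pronoun-swap table (not taken from the DOCTOR script).
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "i", "your": "my", "are": "am",
}

# Hypothetical keyword table: a keyword anywhere in the input
# triggers a canned reply.
KEYWORDS = {
    "mother": "TELL ME MORE ABOUT YOUR FAMILY",
    "father": "TELL ME MORE ABOUT YOUR FAMILY",
}

FALLBACK = "TELL ME MORE"


def tokenize(sentence):
    """Chop a sentence into a list of lowercase words."""
    return re.findall(r"[a-z']+", sentence.lower())


def reflect(words):
    """Swap first- and second-person words so the input can be echoed back."""
    return [REFLECTIONS.get(word, word) for word in words]


def respond(sentence):
    """Return a reply using keyword lookup, pronoun swapping, or a fallback."""
    words = tokenize(sentence)

    # 1. Keyword match: the first keyword found wins.
    for word in words:
        if word in KEYWORDS:
            return KEYWORDS[word]

    # 2. Assumed rule: bounce "I am ..." statements back as a question.
    if words[:2] == ["i", "am"]:
        rest = " ".join(reflect(words[2:])).upper()
        return f"WHY DO YOU SAY YOU ARE {rest}"

    # 3. Nothing matched: use the open-ended prompt.
    return FALLBACK


if __name__ == "__main__":
    print(respond("My mother takes care of me."))
    print(respond("I am sad about my job"))
    print(respond("It rained today."))
```

Nothing in the sketch models what the user means. Every reply is either canned or built from the user’s own words, which is why the Rogerian framing mattered so much.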

That moment happened when Bernie Cosell, a young coder at Boston’s Bolt Beranek & Newman research firm, skimmed Weizenbaum’s article. He saw the step-by-step description of ELIZA’s keyword tables and pronoun trick and figured that he could rebuild it in Lisp. Without touching the original code, Cosell used what he read to create a new version of the system from scratch.

Just a few short years later Bolt Beranek & Newman were plugged into ARPANET, which meant that Cosell’s ELIZA could be reached from any other site by opening a remote terminal session. No punched cards or local install needed, just dial in and use the program from wherever you are.

ELIZA 2.0, still running the DOCTOR script, travelled farther in a month than Weizenbaum’s original would in fifty years. Within a few semesters, grad students from Stanford to Edinburgh believed ELIZA was a Lisp program because that was the only version they could dial into during the small hours.

Ten years on, Creative Computing’s August 1977 issue printed a BASIC-code version to bring ELIZA to the masses. Because home computers like the TRS-80, Apple II, and Commodore PET came with a built-in BASIC interpreter, readers could type the program straight in, hit RUN, and use the chatbot themselves.

Together, Cosell’s Lisp port and Creative Computing’s do-it-yourself BASIC version reinforced the myth that ELIZA had been a purpose-built chatbot rather than a lab experiment.

Making meaning

ELIZA is remembered as a chatbot partly because that’s a lot easier to get your head around than ‘research instrument for studying interpretive projection’. But it’s also because that is what its successors were. Weizenbaum might have been trying to run a study about human psychology, but the people who pushed new versions of his system most certainly were not.

TELL ME MORE ABOUT YOUR FAMILY

My mother takes care of me.

WHO ELSE IN YOUR FAMILY TAKES CARE OF YOU

My father.

YOUR FATHER

You are like my father in some ways.

WHAT RESEMBLANCE DO YOU SEE

You are not very aggressive but I think you don’t want me to notice that.

WHAT MAKES YOU THINK I AM NOT VERY AGGRESSIVE

You don’t argue with me.

This is a piece of a transcript published in Weizenbaum’s 1966 paper. When you understand how the system works it seems rudimentary, but for those who don’t it looks uncanny.

Exchanges like these gave rise to what is now called the ‘ELIZA effect’, or the tendency to falsely attribute human thought processes and emotions to a machine. What rattled Weizenbaum was the ease with which bright adults projected intelligence and introspection onto a cardboard cutout made of if-then statements.

Today, the ELIZA effect is often rolled out to warn people against the dangers of anthropomorphising AI systems. But I think that badly misreads the lesson from Weizenbaum’s machine. Meaning emerges somewhere between system behaviour and social expectation. Ignore either half and you end up with either ‘fully sentient AI’ or, just as reductive, the ‘stochastic parrot’.

Humans have a tendency to see patterns where there are none, but that doesn’t mean there are no patterns to be found anywhere we look. Surface behaviour is never the whole story. Even ELIZA mirrored syntax cleanly enough to keep the exchange afloat.

When GPT-4.5 writes code that compiles, translates Spanish without mangling the idioms, or aces the LSAT, those feats are not projections. Of course we tell stories about them, but to suggest the AI project is nothing but narrative risks throwing the baby out with the bathwater.
