Read on for bits on global compute resources, AI safety in China, and “nuanced” approaches to openness for this week’s edition (plus the usual bag of papers and jobs below). As always, it’s hp464@cam.ac.uk if you want to share something for next week’s post or get in touch for anything else.

Three things

1. The compute divide

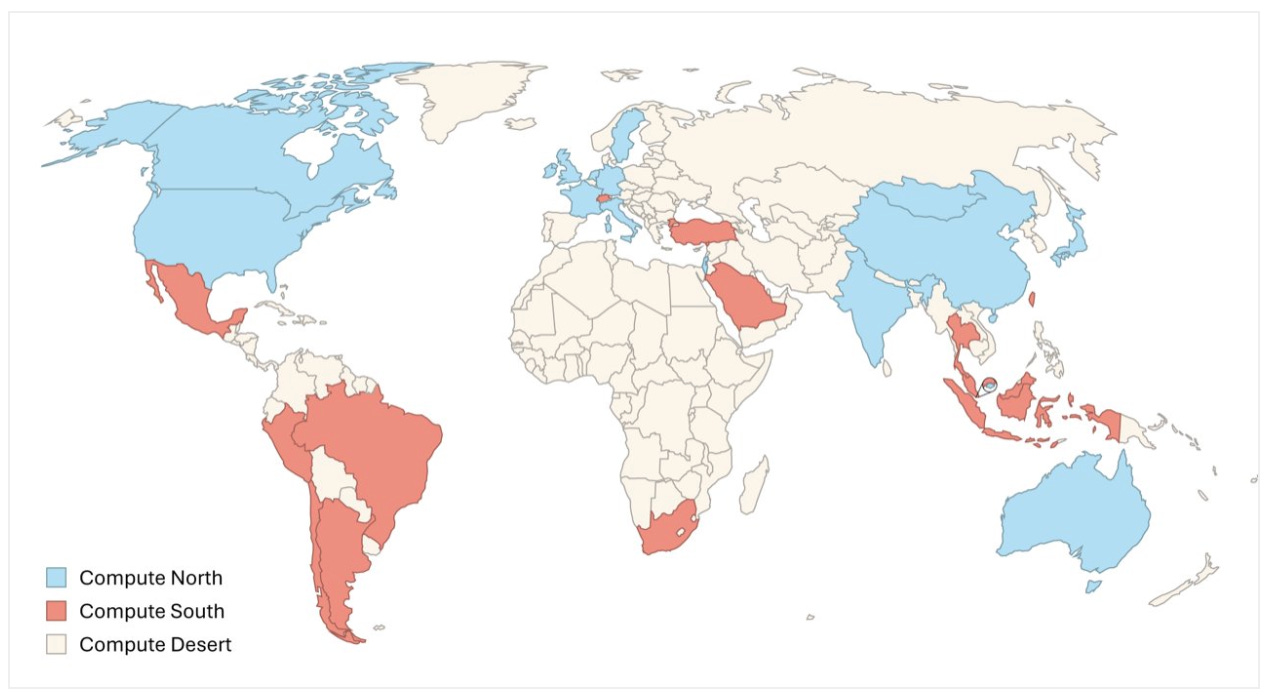

Researchers from the University of Oxford and the Australian National University looked at the state of compute infrastructure development around the world. The work split countries into smaller GPU-enabled “regions” (in this context, datacenter clusters housing lots of GPUs) and counted how many of those regions were present in each country.

They found that, of the 187 GPU regions identified in the study, 36 were in China, 34 in the EU, and 27 in the US. As for the smaller players, India, Australia, and Japan had 9 apiece, while the UK and Canada counted 6 regions each. Factoring in those countries that have some compute infrastructure (as well as those that have none), the authors suggest that the world can be divided into a “Compute North” with the most advanced chips, a “Compute South” with older chips good for running (but not training) AI systems, and “Compute Deserts” with no access to chips whatsoever.

So, what to take from this? Speaking to Time, the authors said the concentration of the most powerful chips in a handful of countries risks locking out many states from “shaping AI research and development.” In essence, the concern is that a nucleus of GPU-rich countries may emerge as AI rule makers, while the rest of the world must be content with positions as AI rule takers.

That is to say: no sovereign compute means a state must deploy foreign-made models and draw on overseas resources to run those models within its borders. For the most part that might not be a bad thing, but I can certainly see some instances (for example, maintaining essential public service delivery reliant on AI) where it might be problematic in the future.

2. AI safety in China

A new report from the Carnegie Endowment for International Peace argued that “a growing number of research papers, public statements, and government documents suggest that China is treating AI safety as an increasingly urgent concern, one worthy of significant technical investment and potential regulatory interventions.”

The piece says that China’s interest in AI safety, much like in Europe and the US, was buoyed by the release of ChatGPT at the end of 2022 (with the author collecting a string of examples to make a case). The most consequential of these comes from the Third Plenum in July, when an English translation of a text released by China’s leadership said the country ought to “institute oversight systems to ensure the safety of artificial intelligence.” As the report notes, though, an alternative translation of the text said the clause called for China to “establish an AI safety supervision and regulation system.”

Whatever the case, interest in AI safety in China seems to be on the rise. The Economist, which helpfully asks “is Xi Jinping an AI doomer?”, said that US and Chinese officials have been privately discussing AI safety (though also noted that China’s political elite is “split” on the issue).

Finally, if you’re interested in AI safety in China, you should check out the work of the folks at Concordia. They do lots of good stuff, including an excellent ‘State of AI Safety in China’ report that takes stock of frontier AI governance in the country.

3. Dimensions of openness

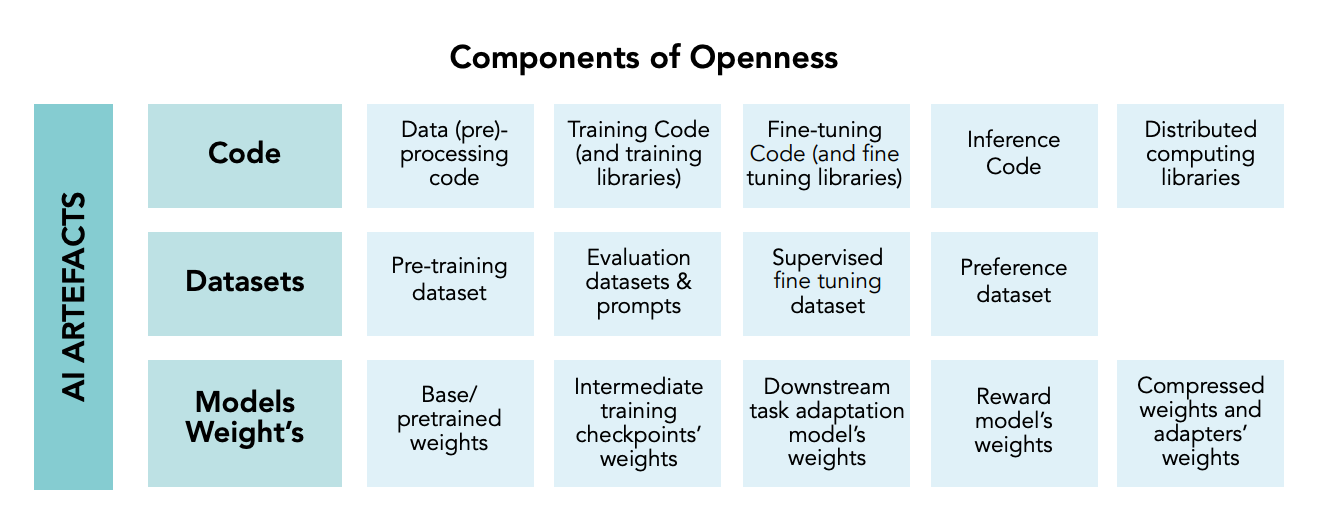

UK think tank Demos released a paper taking stock of the AI policy issue that just doesn’t go away: what to do about open source models. Many readers will no doubt have heard lots about this particular debate, which, at the most basic level, pits concerns about safety against drives to prevent the consolidation of power amongst a handful of major developers.

One problem is that there isn’t really a widely accepted definition of ‘openness’, which researchers—given the woolly nature of the term—are increasingly viewing as a gradient rather than a binary. To that end, the report outlines three ‘dimensions of openness’ and the ‘components of openness’ associated with them. They begin with artefacts, which (as above) are things like types of code, information about datasets, and data about model weights.

Next up is documentation like datasheet components, various pieces of information that might be included within a model card accompanying a release, and any additional commentary shared as part of the publication process. Finally, we have measures that govern how the model can be used (called ‘distribution’ in the report), which include elements such as usage licenses, access mechanisms, and policies that set limits on what people are allowed to do with models.

As for policy recommendations, the work primarily calls for measures to determine the marginal risk or benefit of open source models versus their closed counterparts, and describes a raft of capacity-building programmes to encourage research into these questions.

One idea on the punchier side is that regulators ought to “establish openness as a condition for transferring liability downstream” from developer to deployer or user. This would, depending on the fine print, see a developer shed responsibility for how the riskiest models (at least on paper) are used. I’ll write something more about this in the not too distant future, but for now you can read more on page 30 of the report.

Best of the rest

Friday 30 August

Assessing Large Language Models for Online Extremism Research: Identification, Explanation, and New Knowledge (arXiv)

Ask Claude? Amazon turns to Anthropic's AI for Alexa revamp (Reuters)

Smaller, Weaker, Yet Better: Training LLM Reasoners via Compute-Optimal Sampling (arXiv)

California's "AI Safety" Bill Will Have Global Effects (IEEE Spectrum)

LLMs generate structurally realistic social networks but overestimate political homophily (arXiv)

Thursday 29 August

U.S. AI Safety Institute Signs Agreements Regarding AI Safety Research, Testing and Evaluation With Anthropic and OpenAI (NIST)

UK reshapes its AI strategy under pressure to cut costs (Reuters)

Persuasion Games using Large Language Models (arXiv)

With 10x growth since 2023, Llama is the leading engine of AI innovation (Meta)

What Machine Learning Tells Us About the Mathematical Structure of Concepts (arXiv)

Wednesday 28 August

Anthropic releases Claude’s system prompt (Anthropic)

OpenAI in talks to raise funding that would value it at more than $100 billion (CNBC)

Signal Is More Than Encrypted Messaging. Under Meredith Whittaker, It’s Out to Prove Surveillance Capitalism Wrong (WIRED)

EmoAttack: Utilizing Emotional Voice Conversion for Speech Backdoor Attacks on Deep Speech Classification Models (arXiv)

Teachers to get more trustworthy AI tech as generative tools learn from new bank of lesson plans and curriculums, helping them mark homework and save time (UK Gov)

Tuesday 27 August

AI Could One Day Engineer a Pandemic, Experts Warn (Time)

AI Regulation’s Champions Can Seize Common Ground—or Be Swept Aside (Lawfare)

California’s Draft AI Law Would Protect More Than Just People (Time)

What Is Required for Empathic AI? It Depends, and Why That Matters for AI Developers and Users (arXiv)

Bi-Factorial Preference Optimization: Balancing Safety-Helpfulness in Language Models (arXiv)

Monday 26 August (and things I missed)

Conclusions about Neural Network to Brain Alignment are Profoundly Impacted by the Similarity Measure (bioRxiv)

Introduction to Mechanistic Interpretability (BlueDot Impact)

Is Xi Jinping an AI doomer? (The Economist)

Exodus at OpenAI: Nearly half of AGI safety staffers have left, says former researcher (Fortune)

Starmer can unleash innovation if he wants (The Times)

AI and biosecurity: The need for governance (Science)

A data-driven case for productivity optimism (Substack)

Job picks

Some of the interesting (mostly) AI governance roles that I’ve seen advertised in the last week. As usual, the list only includes new positions that have been posted since the last TWIE (but lots of the jobs from the previous edition are still open).

Policy Officer, EU AI Office (US)

Research Assistant, Global Catastrophic Risks, Founders Pledge (Remote, US)

Chief Operating Officer, Center for AI Safety (US)

Expression of Interest, Strategic Proposal Writer, Centre for Long-Term Resilience (London)

Product Communications Lead, Anthropic (US)