This week has it all: a recent (I’ll be honest, this came out last week) report from the United Nations, a new paper on the inherent (or not) morality of superintelligence, and an effort to track AI adoption in the United States (and compare it to technologies of yesteryear). As usual, get in touch with me at hp464@cam.ac.uk with things to include, comments or anything else!

Three things

1. UN report calls for additional reports

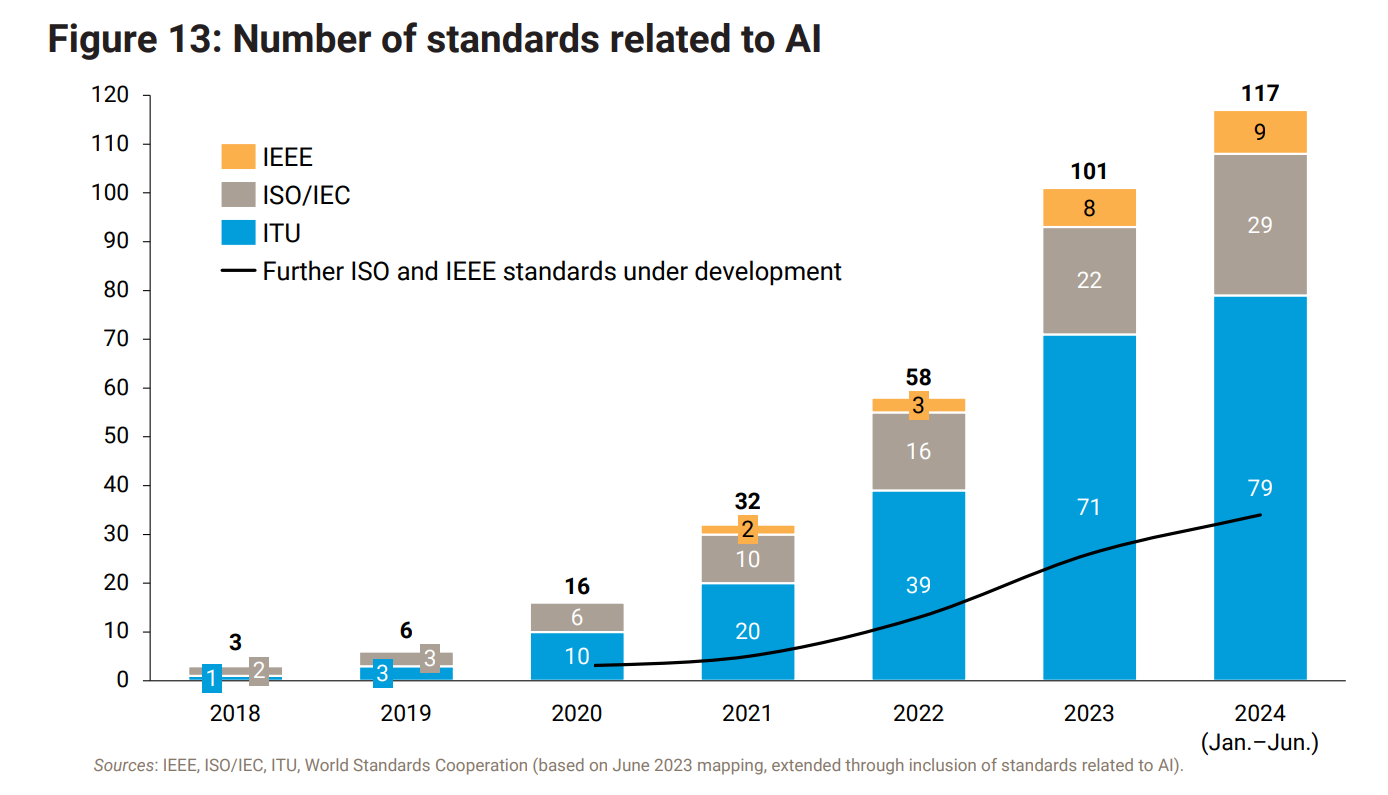

This is technically from last week, but given I wrote about the interim version of this report back in December 2023, it felt fair that we also take a look at the final product. As a reminder, the work comes from the UN’s high-level advisory group containing representatives from government, civil society, the private sector, and academia. It basically tries to answer the question: what should the UN do about AI?

The interim report outlined seven types of function that the UN could house, which ranged from those that are more straightforward to implement (e.g. a horizon scanning initiative similar to the IPCC) to those that are trickier (e.g. monitoring mechanisms “inspired by [the] existing practices of the IAEA”).

Perhaps unsurprisingly, the final report advocates for the IPCC-type model, though I am unclear how different this process will be from the work currently conducted under the auspices of the international AI safety summit programme kicked off in the UK last year. Whatever the case, we can look forward to more reports from the UN if it adopts the recommendations laid out in the proposal (which, to be fair, also includes more substantial measures like a standards exchange).

The report did, however, leave the door open for different approaches in the future: “If the risks of AI become more serious, and more concentrated, it might become necessary for Member States to consider a more robust international institution with monitoring, reporting, verification, and enforcement powers.” Let’s see, I guess!

2. Does superintelligence have to be moral?

Leonard Dung, a postdoctoral researcher at the Centre for Philosophy and AI Research at the University of Erlangen-Nürnberg, asks: “Is superintelligence necessarily moral?” The long and short of it is ‘no, definitely not’.

The piece essentially takes aim at those, like Müller and Cannon, who suppose that the capacity for moral thinking is connected to intelligence. The idea is that because a superintelligence is cognitively superior to humans in all domains, it should also be superior in moral reasoning (and should therefore recognise that goals like mass-producing paperclips aren’t all that ideal).

In response, the paper argues that this line of thinking conflates the ability to reason morally with the motivation to act morally. Dung argues that a superintelligent AI could have superior moral reasoning capabilities while still lacking any motivation to act on those considerations (if they conflict with its fundamental goals).

To go back to the paperclip-maximising superintelligence, the argument is that, if its goal is to maximise paperclip production at all costs, a superintelligence would have no motive to act according to what is morally best, even if it were capable of reasoning about why turning the solar system into paperclips would be a bad thing for us.

3. AI adoption twice as fast as the internet

Back firmly in the present, a major study from researchers at the Centre for Economic Policy Research, Harvard Kennedy School, and Vanderbilt University tracked how frequently Americans are using AI. In a survey of 5,014 respondents aged 18 to 64 conducted in August 2024, the group found that almost 40% of US adults had used models like GPT-4 or Claude at some point up to the time of the survey.

Of these, about 1/4 said they used AI weekly while 1/10 used the technology every day, with adoption higher among younger, more educated, and higher-income workers (not to mention that men were also more likely to use AI than women). What I found particularly interesting, though, were the comparisons between the adoption rate of generative AI and the historical adoption rates of personal computers and the internet:

Large models reached 39.5% adoption after 2 years

The internet reached 20% adoption after 2 years

Personal computers reached 20% adoption after 3 years

So, despite the chorus of people who will tell you it’s all smoke and mirrors, hype, or snake oil, it turns out that large models are pretty useful after all. Clearly, it’s still early days, but I think this research (and other studies like Stripe’s new work on revenue) should do enough to disabuse anyone of the notion that only a tiny group of people actually care about AI.

Best of the rest

Friday 27 September

On Analogies (Substack)

Find Rhinos without Finding Rhinos: Active Learning with Multimodal Imagery of South African Rhino Habitats (arXiv)

AI start-ups generate money faster than past hyped tech companies (FT)

Why Companies "Democratise" Artificial Intelligence: The Case of Open Source Software Donations (arXiv)

OpenAI as we knew it is dead: Why the AI giant went for-profit (Vox)

Thursday 26 September

PM tells US investors "Britain is open for business" as he secured major £10 billion deal to drive growth and create jobs (UK Gov)

Beyond Tax Credits: Pull Mechanisms to Crowd-in Private R&D Investment (UK Day One)

AI crawlers are hammering sites and nearly taking them offline (Fast Company)

Models Can and Should Embrace the Communicative Nature of Human-Generated Math (arXiv)

Judgment of Thoughts: Courtroom of the Binary Logical Reasoning in Large Language Models (arXiv)

Wednesday 25 September

AI Pact Signatories (EU Commission)

Decoding Large-Language Models: A Systematic Overview of Socio-Technical Impacts, Constraints, and Emerging Questions (arXiv)

To What Extent Has Artificial Intelligence Impacted EFL Teaching and Learning? A Systematic Review (Arbitrer)

Towards User-Focused Research in Training Data Attribution for Human-Centered Explainable AI (arXiv)

Setting the AI Agenda -- Evidence from Sweden in the ChatGPT Era (arXiv)

Tuesday 24 September

Use of generative AI in application preparation and assessment (UKRI)

Wrapped in Anansi's Web: Unweaving the Impacts of Generative-AI Personalization and VR Immersion in Oral Storytelling (arXiv)

Artificial Human Intelligence: The role of Humans in the Development of Next Generation AI (arXiv)

Is superintelligence necessarily moral? (OUP)

Lessons for Editors of AI Incidents from the AI Incident Database (arXiv)

Monday 23 September (and things I missed)

Early Insights from Developing Question-Answer Evaluations for Frontier AI (UK AISI)

Open Problems in Technical AI Governance (Stanford)

The Intelligence Age (Sam Altman)

On Those Undefeatable Arguments for AI Doom (1A3ORN)

On speaking to AI (Substack)

Hype, Sustainability, and the Price of the Bigger-is-Better Paradigm in AI (arXiv)

Job picks

Some of the interesting (mostly) AI governance roles that I’ve seen advertised in the last week. As usual, it only includes new positions that have been posted since the last TWIE (but lots of the jobs from the previous edition are still open).

Request for Opportunities, ARIA (UK)

Cooperative AI PhD Fellowship 2025 (Global)

Product Policy Manager, Bio, Chem, and Nuclear Risks, Anthropic (US)

AI Governance Taskforce, Arcadia Impact (Global)

Principal Product Manager, AGI Policy, Amazon (US)