This week’s edition looks at what the re-election of President Trump means for AI, new research assessing the capabilities gap between open and closed models, and various news about LLMs and the military. The usual plea: if you want to send anything my way, you can do that by emailing me at hp464@cam.ac.uk.

Three things

1. What does a Trump presidency mean for AI?

The big news this week was of course the re-election of President Trump, whose party also looks to have won the Senate and (at the time of writing) the House of Representatives. If the GOP executes a clean sweep of the major organs of government, we should expect a fairly muscular programme of regulatory action. For AI, there are two avenues that we should pay attention to.

First up is AI regulation. There’s a fairly decent rundown of the major beats from TechCrunch for anyone interested in a more detailed summary, but in my mind the central question will be whether the Trump Administration carries out its promise to scrap President Biden’s Executive Order on AI, which used compute thresholds to compel developers to implement safety measures on the biggest and most powerful models. The Biden Administration also spun up the US AI Safety Institute (US AISI), which works with its UK counterpart to assess frontier models. If I had to guess, I’d expect the Executive Order to go and for the US AISI to receive little additional funding.

The second major arena is what we can loosely describe as national innovation policy. Whether President Trump will scrap the landmark CHIPS Act, which aimed to reshore huge chunks of the semiconductor supply chain on which AI development ultimately rests, remains an open question. Again, Trump has threatened to scuttle it, but I would be surprised if the Act doesn’t live on in one form or another.

After all, the Trump team seems reasonably aware of the technological, material, and financial forces unleashed by the release of ChatGPT in 2022. Ivanka Trump has talked about Leopold Aschenbrenner’s Situational Awareness, which predicts enormous increases in AI’s energy footprint, and Trump himself mentioned the issue on the campaign trail. For that reason, I expect regulations to be loosened so that AI labs can build ‘behind the meter’ power generation capacity (primarily via nuclear reactors), and for the most essential provisions in the CHIPS Act to survive.

2. Age gap discourse

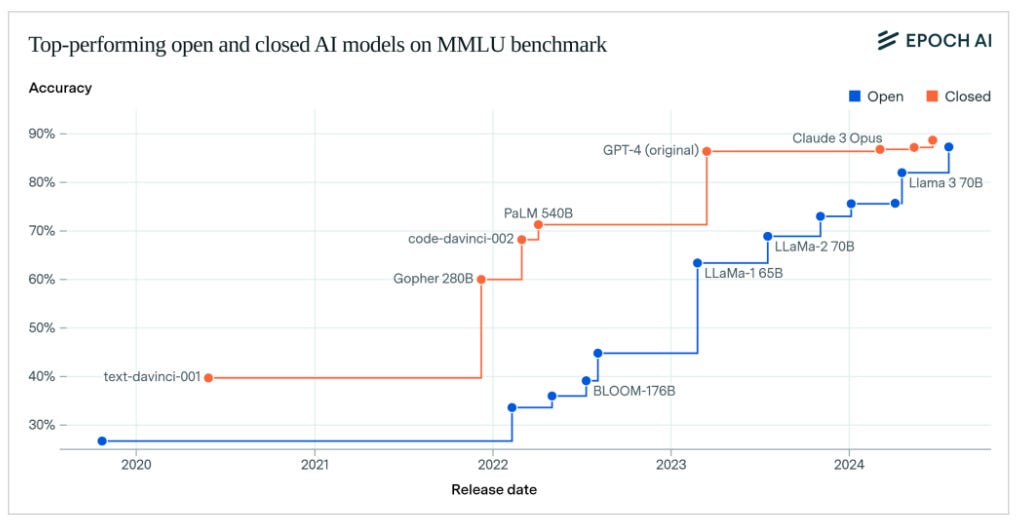

Received wisdom generally states that open source models consistently lag behind their closed counterparts. Now, a new study from the always excellent Epoch AI puts a figure on the delta between open and closed models. The group reckons that, when it comes to the cutting edge, what's freely available today was likely matched by proprietary systems roughly 12 months ago.

The gap varies across different benchmarks. On GPQA (PhD-level reasoning problems), Meta's Llama 3.1 405B closed the gap to about 5 months behind Claude 3 Opus, while on MMLU (testing general knowledge), the lag has historically been much longer — ranging from 16 to 25 months. What makes this particularly interesting is that we're seeing the gap shrink on newer benchmarks: GPQA, created in 2023, shows a much smaller lag than MMLU, which was created in 2020.

Newer open models tend to be more efficient with their compute. China’s DeepSeek V2, for instance, achieves similar performance to Google's PaLM 2 while using about one-seventh of the computational power. Meta has already announced plans for its new Llama 4 model to use nearly 10 times more compute than its predecessor, with a view to narrowing the gap next year. Whether Meta is successful (and if so, whether the group decides to open source Llama 4) will give us a good sense of whether Epoch’s pattern is still holding up.

3. Model makers defend national security partnerships

This week saw a string of announcements from AI firms about their work with the US Department of Defense. Meta was first out of the blocks, with the firm saying it’s making its Llama models available for US government agencies and contractors in national security — including Amazon, Anduril, Palantir, and Lockheed Martin. The decision came after Reuters reported last week that Chinese research scientists linked to the People’s Liberation Army (PLA) used an older Llama model to develop a tool for defense applications.

As part of the announcement, Scale AI said that it had built Defense Llama, “the LLM purpose-built for American national security”, in collaboration with Meta. There’s not much detail about Defense Llama, other than that it had been specially fine-tuned for defense applications, but Scale AI CEO Alexandr Wang did connect the launch to a recent National Security Memorandum from the Biden Administration. The memorandum, which advocated for greater use of AI by the US Armed Forces, urged the DoD to “act with responsible speed...to make use of AI capabilities in service of the national security mission”.

Just a few days later, frontier model maker Anthropic disclosed a partnership with Amazon and defense technology provider Palantir to make its Claude models available to US intelligence and defense agencies. The models will run on Amazon's cloud servers and be accessed through Palantir's specialised platform. The idea is to help government officials sort through huge amounts of data quickly to make better decisions, which (in an example no doubt picked to dull down the news) Palantir likened to the use of AI in the insurance industry.

Best of the rest

Friday 8 November

Selling Spirals: Avoiding an AI Flash Crash (Lawfare)

The Impact of AI on the Labour Market (TBI)

TSMC to close door on producing advanced AI chips for China from Monday (FT)

Coca-Cola’s Christmas ad to be ‘fully created with AI’ (The Grocer)

Modeling the increase of electronic waste due to generative AI (Nature)

Thursday 7 November

New a16z investment thesis: AI x parenting (X)

Multi-Agents are Social Groups: Investigating Social Influence of Multiple Agents in Human-Agent Interactions (arXiv)

State of AI outtakes #2: embodied AI (Substack)

OpenAI defeats news outlets' copyright lawsuit over AI training, for now (Reuters)

AI firms should not solve ethical problems: ElevenLabs safety chief (UKTN)

Wednesday 6 November

What Trump means for AI safety (Substack)

Black Forest Labs Flux upgrade (X)

UK government press release on AI assurance and UK AISI (UK Gov)

The bread and butter problem in AI policy (Substack)

Language Models are Hidden Reasoners: Unlocking Latent Reasoning Capabilities via Self-Rewarding (arXiv)

Tuesday 5 November

Bounty programme for novel evaluations and agent scaffolding (UK AISI)

The Gap Between Open and Closed AI Models Might Be Shrinking. Here’s Why That Matters (TIME)

AI-generated images threaten science — here’s how researchers hope to spot them (Nature)

ChatGPT in Research and Education: Exploring Benefits and Threats (arXiv)

Monday 4 November (and things I missed)

Can LLMs make trade-offs involving stipulated pain and pleasure states? (arXiv)

Imagining and building wise machines: The centrality of AI metacognition (arXiv)

UK defence and dynamism (Substack)

Thread on the philosophy of the transformer (X)

Targeted Manipulation and Deception Emerge when Optimizing LLMs for User Feedback (arXiv)

Job picks

Some of the interesting (mostly) AI governance roles that I’ve seen advertised in the last week. As usual, it only includes new positions that have been posted since the last TWIE (but lots of the jobs from the previous edition are still open).

Program Manager, AI and Society, Stanford University, Human-Centered Artificial Intelligence Institute (San Francisco)

Front of House Coordinator, London Initiative for Safe AI (London)

Operations Generalist, FAR AI (San Francisco)

Operations Generalist, Apollo Research (London)

Policy Researcher, AI and Labour Markets, Interface (Berlin)

Interesting how Silicon Valley followed Google's lead with the Maven project. Now, a few years later, it's totally normal for our best-funded research labs to contribute to national defense and military tech.

Google is an investor in Anthropic, which recently decided to work with Palantir on national defense. Palantir gets so much of its revenue from national defense contracts that they are the only reason it's even profitable. Now it's officially fashionable to use AI research for military ends.