Another Saturday, another newsletter. In this edition, I looked at misaligned expectations between experts and the public about what AI will do for us, a new model for generating worlds from Google DeepMind, and a study about AI and loneliness. As always: if you want to send anything my way, you can do that by emailing me at hp464@cam.ac.uk.

Three things

1. Great expectations

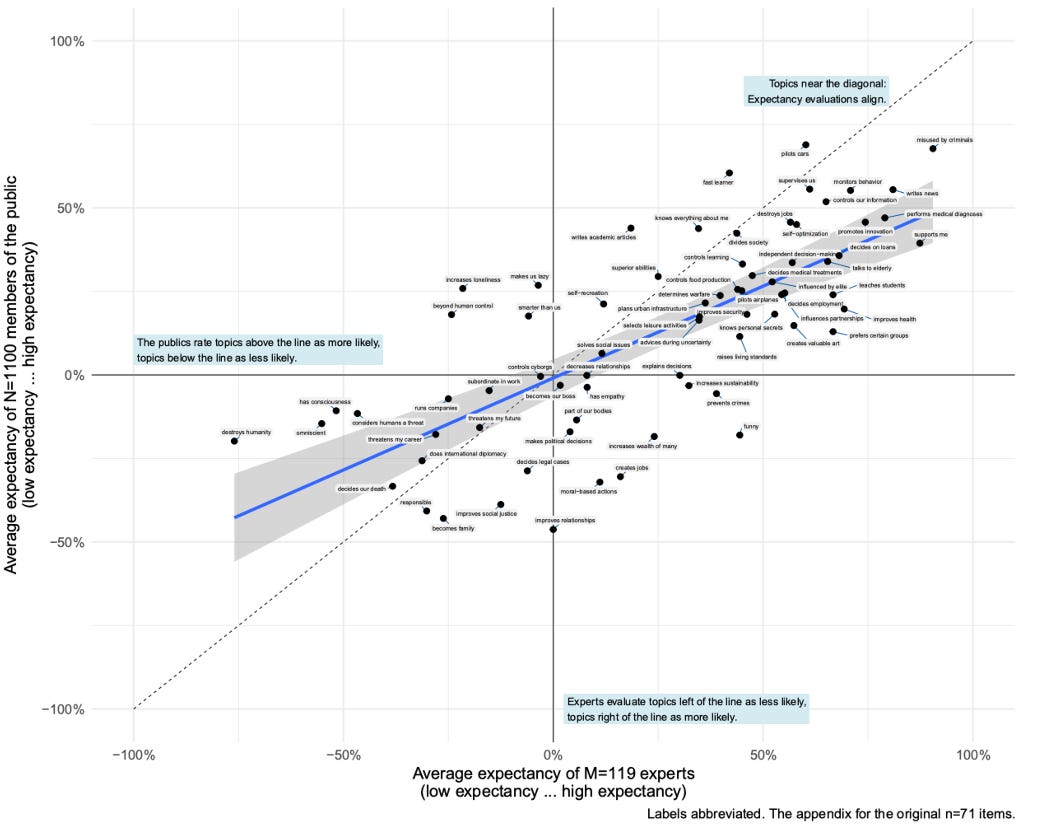

Researchers from Aachen University in Germany released a new study about how different groups have their own ideas about what AI can and can’t do. The study, which involved 119 AI experts—defined as ‘people shaping AI development’—and 1,100 members of the public, found that experts generally see AI as more beneficial and less risky than the general population does.

Across 71 different scenarios covering healthcare (e.g. ‘AI decides medical treatments’), social utility (e.g. ‘AI increases personal loneliness’), and work (e.g. ‘AI creates many jobs’), the paper found that experts expected higher probabilities of positive outcomes and perceived lower risks compared to the public. When weighing risks against benefits, experts gave risks only about one-third the weight of benefits, while the public weighted risks at about half the importance of benefits.

The researchers warn that this misalignment between those developing AI and those who will use it could lead to the creation of ‘Procrustean’ AI, a reference to the chop-happy Procrustes of Greek mythology, who forced travelers (at sword point) to fit his bed rather than providing correctly sized beds. The idea, which I think is probably a bit too harsh, is that failing to close the gap between expectations and reality may force people to conform to systems, rather than prompting developers to design systems that adapt to human needs.

2. The week of the world models

This was the week of the world model, a predictive system that can simulate or ‘imagine’ what will happen next in an environment based on specific actions. Meta kicked things off with the release of a Navigation World Model (NWM), a controllable video generation model that looks at a video showing how someone or something moves through an environment and uses this information to generate new video showing what would happen if you took certain actions (turning left, going straight, etc.).

Next up is a paper from researchers at the University of Hong Kong and the University of Waterloo, which details another world simulator that the authors refer to as, uhm, The Matrix. This paper, which opens with a quote from the 1999 film, describes a system capable of generating ‘infinitely long 720p high fidelity real-scene video streams.’ Trained on data from games like Forza Horizon 5 and Cyberpunk 2077, the model basically generates high-quality video that responds in real time to keyboard controls (sort of like making a video game on the fly).

Making a video game on the fly is probably also the best way to describe the final world model released this week, which comes from Google DeepMind. Called Genie 2, the model (like The Matrix) allows you to create new videos using text-based prompts or by inputting images. Imagine being able to drive a car through any environment you can describe, even ones that weren't in the original training data, or making your way through cityscapes, jungles, or pretty much anything you can imagine.

3. If Eleanor Rigby had met ChatGPT

Finally, a new paper from Microsoft Research explores how lonely people are increasingly turning to ChatGPT as a companion. The study, which analysed nearly 80,000 conversations with the model, found that 8% of non-task-oriented chats showed signs of loneliness (which the researchers determined by picking up on cues within the conversation). These users were having significantly longer conversations (6 turns versus the typical 2) and were often seeking what the authors characterise as emotional support.

While ChatGPT proved capable of providing empathetic responses in general conversations, the research did identify some concerning patterns. Lonely users were more than twice as likely to include toxic content in their messages, with this content being directed at women 22 times more frequently than at men. The paper also found ChatGPT struggling with high-stakes situations like discussions of trauma or suicidal thoughts, where responses often defaulted to generic suggestions about seeking therapy without providing specific resources or emergency contacts.

The findings remind us that, even though AI chatbots aren't designed or marketed as mental health resources, people experiencing loneliness will likely turn to them anyway given their accessibility and human-like interactions. The authors argue this raises important questions about ethical deployment and regulation of these systems, particularly when dealing with vulnerable users. They conclude by advocating for existing recommendations from the US Surgeon General for technology companies to prioritise designing systems that “nurture healthy connections” through a combination of safeguarding, transparency, and product design measures.

Best of the rest

Friday 6 December

Bio-defense(less)? (Substack)

Inside Britain’s plan to save the world from runaway AI (Politico)

Street talk #1 - 6 December 2024 (Substack)

Apollo Research evals of OpenAI’s o1 model (X)

Introducing ChatGPT Pro (OpenAI)

The fake AI slowdown (Vox)

Thursday 5 December

Music sector workers to lose nearly a quarter of income to AI in next four years, global study finds (Guardian)

DeepMind AI weather forecaster beats world-class system (Nature)

The future of AI agents: highly lucrative but surprisingly boring (FT)

Musk's xAI plans massive expansion of AI supercomputer in Memphis (Reuters)

Genie 2: A large-scale foundation world model (Google DeepMind)

Wednesday 4 December

Toward AI-Resilient Screening of Nucleic Acid Synthesis Orders: Process, Results, and Recommendations (bioRxiv)

Mastering Board Games by External and Internal Planning with Language Models (Google DeepMind)

Why You Should Care About AI Agents (FLI)

Late Takes on OpenAI o1 (Sorta Insightful)

Breakthroughs of 2024 (X)

Tuesday 3 December

Details about Anthropic’s fellows programme (X)

Introducing Amazon Nova, our new generation of foundation models (Amazon)

What is the Future of AI in Mathematics? Interviews with Leading Mathematicians (Epoch AI)

Anduril Partners with OpenAI to Advance U.S. Artificial Intelligence Leadership and Protect U.S. and Allied Forces (Anduril)

The Reality of AI and Biorisk (arXiv)

Monday 2 December (and things I missed)

Revitalising Nuclear: The UK Can Power AI and Lead the Clean-Energy Transition (TBI)

Do Large Language Models Perform Latent Multi-Hop Reasoning without Exploiting Shortcuts? (arXiv)

Your guide to AI: December 2024 (Substack)

US hits China’s chip industry with new export controls (FT)

LLMs as Research Tools: A Large Scale Survey of Researchers' Usage and Perceptions (arXiv)

Why ‘open’ AI systems are actually closed, and why this matters (Nature)

Is Your LLM Secretly a World Model of the Internet? (arXiv)

Letter to the attorney general about AI (Ted Cruz)

Job picks

Some of the interesting (mostly) AI governance-focused roles that I’ve seen advertised in the last week. As usual, the list only includes new positions that have been posted since the last TWIE (but lots of the jobs from the previous edition are still open).

AI Safety Fellow, Anthropic (London)

Head of People, UK Government, AI Safety Institute (London)

Expression of Interest, European Union, European AI Office (Various, Europe)

Content Writer, Freelance, CivAI (Remote)

External Safety Testing Manager, Google DeepMind (London)