Friday nights were game night for John Holland. Once every fortnight, Holland and a small group of young IBM researchers met to play Kriegspiel, poker, and Go. It was the summer of 1951 in Poughkeepsie, New York, and IBM was racing to build its first commercial electronic computer.

By day, the researchers worked out how circuits, memory, and instruction sets fit together to support machine-language programming in the IBM 701. By night, when they weren’t trading cards, they tested it. Some were showing the machine how to play checkers. Holland, fresh from an undergraduate degree at MIT, was teaching it to learn.

John Holland grew up amongst the quiet plains and soybean factories of rural Indiana. Born in 1929 to a businessman father and an adventurous mother who learned to fly in her forties, Holland was encouraged to try anything that took his fancy.

A chemistry set sparked his early love of science, and the young Holland eventually found his way to MIT. There, as an undergraduate physics major, he undertook his bachelor’s thesis on Whirlwind, the university’s digital computer built for missile detection.

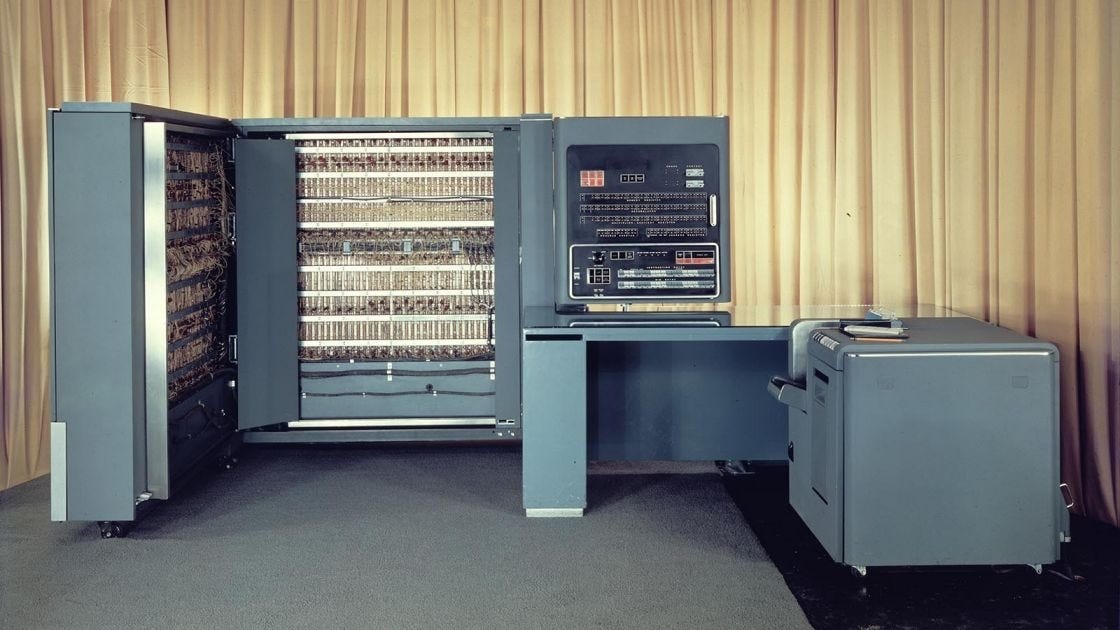

After MIT, Holland took a job at IBM’s Poughkeepsie lab in 1950. There he joined the small group building the IBM 701, the company’s first commercial electronic computer. Nicknamed the Defense Calculator, the 701 was a room-sized behemoth made up of tubes and magnetic drums.

By 1959 Holland had moved to the University of Michigan and earned what was essentially its first computer-science PhD, with a dissertation studying feedback loops in early neural networks. Spurred by his encounters with the IBM 701 and buoyed by his academic work, Holland said he began to think ‘about genetics and adaptive systems’.

A key moment came in 1955 when Holland stumbled on a book called The Genetical Theory of Natural Selection, written by Ronald Fisher. Fisher, generally regarded as one of the great figures in the history of statistics, set out to show how the laws of inheritance could underpin natural selection. He introduced the idea that evolution is about changes in gene frequencies within populations, and formulated the famous ‘fundamental theorem of natural selection,’ which roughly states that a population can only get fitter as fast as there’s useful genetic variety to work with.

In Fisher’s equations Holland saw a template for an algorithm that could encode potential solutions as ‘genes’, let the fitter ones reproduce, and occasionally mutate them. The combination of variation and reward meant he just needed to define a target and watch the algorithm adapt its way toward a good solution.

In practice, that meant treating possible solutions like digital organisms. The system evaluated each string of code according to how well it performed, then selected the stronger ones to combine and reproduce. Their code was mixed, mutated, and eventually used to create a new generation of candidate solutions.
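
The loop described above can be sketched in a few lines of Python. This is a minimal illustration rather than Holland's own formulation: the bit-string encoding, the toy `fitness` function (count the 1s), tournament selection, and every parameter value are assumptions chosen to keep the example small.

```python
import random

def fitness(bits):
    """Toy scoring function (an assumption): count the 1s in the string."""
    return sum(bits)

def select(population, rng, k=3):
    """Tournament selection: the fittest of k randomly drawn candidates wins."""
    return max(rng.sample(population, k), key=fitness)

def crossover(a, b, rng):
    """Single-point crossover: a prefix of one parent joined to a suffix of the other."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]

def mutate(bits, rng, rate=0.02):
    """Flip each bit independently with a small probability."""
    return [1 - b if rng.random() < rate else b for b in bits]

def evolve(length=32, pop_size=40, generations=60, seed=0):
    """Run the evaluate, select, recombine, mutate cycle; return the best string and its score history."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        best = max(population, key=fitness)
        history.append(fitness(best))
        # Carry the best candidate over unchanged (elitism), then breed the rest.
        children = [best]
        while len(children) < pop_size:
            child = crossover(select(population, rng), select(population, rng), rng)
            children.append(mutate(child, rng))
        population = children
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history
```

Calling `evolve()` returns the best string found and the best score per generation. Because the top candidate is always carried over, that score never goes down, although nothing here guarantees the run reaches the maximum.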

The process has an advantage over simpler search methods. Hill climbing algorithms improve a single solution piece by piece but get stuck on local peaks. It’s easy to produce a good answer but hard to produce a great one. Genetic algorithms avoid this fate by maintaining a diverse population of solutions. Through mutation and recombination, they can occasionally make bold jumps into less promising territory that turn out to lead somewhere better.
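
The hill-climbing half of that comparison is easy to see on a toy landscape. The sketch below uses a hypothetical list of heights with a small peak and a tall one, and it shows only why spreading candidates around helps. Running several climbers from different starts is a stand-in for population diversity here, not a genetic algorithm.

```python
# Hypothetical heights: a local peak at index 2 (height 5), the global peak at index 7 (height 9).
LANDSCAPE = [1, 3, 5, 4, 2, 3, 6, 9, 7, 4]

def hill_climb(start, heights=LANDSCAPE):
    """Step to the higher neighbour until neither neighbour is higher."""
    pos = start
    while True:
        neighbours = [i for i in (pos - 1, pos + 1) if 0 <= i < len(heights)]
        best = max(neighbours, key=lambda i: heights[i])
        if heights[best] <= heights[pos]:
            return pos  # stuck: every move from here goes downhill
        pos = best

def best_of_many(starts, heights=LANDSCAPE):
    """Climb from several starting points and keep the highest endpoint."""
    return max((hill_climb(s, heights) for s in starts), key=lambda i: heights[i])

print(hill_climb(0))               # 2: the climber stops on the small peak
print(best_of_many([0, 3, 6, 9]))  # 7: one of the spread-out climbers reaches the tall peak
```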

Holland published the first comprehensive account of genetic algorithms in his 1975 book Adaptation in Natural and Artificial Systems. The work aimed to lay out a general ‘mathematical theory of adaptation’, with genetic algorithms as a central tool for solving complex optimisation problems.

Genetic algorithms are good at things like shaping an aircraft wing, designing an electronic circuit, or routing a network. They work well for tasks for which you can’t easily design a solution from first principles, but you can test how well any given attempt performs.

In machine learning, genetic algorithms are used when there’s no clear way to calculate the best setup (like deciding which features to include in a model or tuning parameters that control how it learns). If you can’t derive the answer but you can run it and see how well it works, it’s a problem that genetic algorithms might be good at.

Emergence

Holland extended his framework to what he called Learning Classifier Systems (LCS) in the 1980s. These were evolving collections of simple if-then rules that compete, cooperate, and adapt based on feedback. Instead of solving a fixed problem, they learn how to behave in changing environments. One system learned to play checkers. Another optimised industrial pipeline flow in real time. The idea was that these systems were not pre-programmed; instead, they learned which rules worked and reinforced them over time.

It was a curious marriage. The systems used if-then rules, the bread and butter of symbolic AI, but fused them with learning — the hallmark of connectionist models like neural networks. In most of the AI industry at the time, these approaches were seen as opposites (and for the most part still are today). Rules are explicit, logical, and human-readable. Learning is messy, statistical, and ambiguous.

But Holland didn’t see a contradiction. He saw a system where rules could be treated like genes. They were hypotheses to be tested, combined, and evolved. Each rule was part of a population and scored according to how well it performed. Useful ones were strengthened and reused, while weaker ones were discarded and replaced by new variations.

In later years Holland became a leading voice in the science of complex adaptive systems. He co-founded the Santa Fe Institute, an interdisciplinary hub for studying complexity. His philosophy was consistent: intelligence emerges from the interaction of many simple parts, not from a single clever algorithm.

Holland viewed any group of interacting, adaptive entities (be it neurons in a brain, ants in a colony, or humans in a city) as essentially the same phenomenon: a form of computation with emergent collective behaviour. In his book Emergence, for example, he described how brain functions or market behaviour could not be understood by simply summing up individual units; rather, the nonlinear interactions made the aggregate far more complex than the parts.

Genetic algorithms represent a philosophy of intelligence. Holland believed that intelligence was an emergent property that bubbled up from competition. That idea is now at the core of the most successful approaches to AI. While many of these approaches do not use genetic algorithms, they do embody Holland’s deeper lesson: intelligence emerges from systems that change.
